Adding Provenance and Giving Life to LLM Bunnies

Also: Train Daddy, Retroactive Funding, and the Crampedness of the Volume

For all the doomerism around AI lately, one of the fun things I saw this week was this cute conversational bunny. An LLM with text-to-speech, attached to a personality, has such fun and wide-ranging applications that I’m surprised it’s not bigger yet.

It reminded me of this scene from Her, where Theodore has a surprisingly natural conversation with a (funny and rude) game character (this film is looking increasingly prescient).

Where these kinds of conversational LLMs go from here will be interesting.

They can stay in their digital screens as-is.

They could also acquire more corporeal bodies: actual limbs and so on (oh hey, I, Robot?).

Or they can gain legitimacy as characters by having provenance attached to them.

The latter would essentially mean imbuing these characters with more life by making them into NFTs. I’ve written before about the value of using a blockchain to add legitimacy to cultural artefacts.

The value is derived from what the NFT represents, and the blockchain’s immutable timestamping helps legitimise a specific reference. Various ‘signals’ are codified into it that make it desirable to own, even though the token is separate from the thing it references.

While the image itself is (usually) immutably codified and accounts for much of the desirability, who “signed off” on the NFT matters just as much. Much of the value comes from it being a blessing of legitimacy, expressed through timestamped cryptographic signatures from specific people: it’s all about “the signature”.

It’s a focal point: a legitimacy engine that preserves, over time, the social relationships that form around signed cultural objects.

So, blockchaining these characters would allow them to accrue significance and legitimacy over time. The interaction itself wouldn’t be any different (just as a JPEG looks the same whether or not it’s an NFT), but you would get added social and cultural context written into them.

In other words, given two LLM bunnies, it’s more meaningful to interact with one that you *know* has history than with one that’s just there, without context. That’s how artwork has always accumulated meaning: its provenance attracts value over time.
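To make the “timestamped, signed history” idea a little more concrete, here is a minimal sketch of a hash-chained provenance log, assuming a toy setup: each entry commits to the hash of the previous one, so quietly rewriting any past entry becomes detectable. This is not how Ethereum or any NFT standard actually stores history. Real chains rely on public-key signatures and consensus, whereas the `signer` field here is just a string anyone could type. `ProvenanceLog`, `append`, `verify` and the `.eth` names are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the very first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class ProvenanceLog:
    """Hypothetical append-only log: each entry commits to the hash of the one before it."""
    entries: list = field(default_factory=list)

    def append(self, signer: str, action: str, timestamp: int) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"signer": signer, "action": action, "timestamp": timestamp, "prev": prev}
        entry = dict(body, hash=_digest(body))
        self.entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        # Recompute every hash; editing any past entry breaks the chain from that point on.
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("signer", "action", "timestamp", "prev")}
            if entry["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = ProvenanceLog()
log.append("artist.eth", "minted bunny #1", 1_677_000_000)
log.append("collector.eth", "acquired bunny #1", 1_677_100_000)
print(log.verify())  # True

log.entries[0]["signer"] = "impostor.eth"  # try to rewrite history
print(log.verify())  # False
```

The point of the sketch is the direction of value: every new entry makes the whole history harder to fake, which is roughly why an older, well-documented bunny would carry more weight than a fresh one.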

Some companies are tackling this intersection, like Alethea AI, from creating conversational characters:

…to using existing NFTs and imbuing them with intelligence.

It’s a bit janky at the moment, and the idea can come across as cringe if done badly. But there’s something here. One variation could be a physical housing similar to the one Beeple made for HUMAN ONE.

The artwork is the housing. And perhaps this ‘portal’ is just that: a portal into a parallel world where the LLM bunnies roam.

A tangential project is Finiliar, whose cute characters react to price feeds and are often shown “living in” watches and phones.

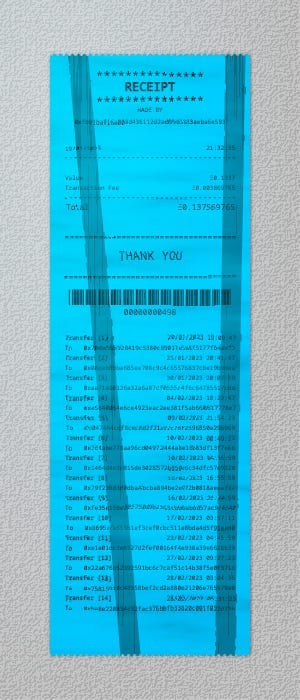

To be honest, I don’t think any character-based NFT project has truly succeeded in surfacing the history and provenance of the “shared, signed cultural object” as a primary mode of experience. It’s usually just implicit (if you know it’s an NFT). There are art projects that do this, like 0xHaiku’s Receipt, which records all of its underlying transfers and automatically displays them as part of the artwork.

Hopefully we will continue to see interesting mashups at this intersection, from the artistic (like Finiliar), to the LLM (Uncle Rabbit), to technical innovations (like giving wallets to NFTs themselves).

Optimism’s Retroactive Funding Round 2

Speaking of provenance… Optimism’s push for retroactive funding has been interesting to follow. Its second major funding round has just wrapped up.

Because there is an immutable record, retroactive funding can look back at what has transpired and reward people for their contributions to the network. On a long enough timescale, it presents an interesting toolbox where you can use “time as a platform”. If retroactive funding succeeds, it incentivises people to interact with any blockchain just because: an unknown future might reward them for it.

How to allocate these kinds of rewards is still being debated. With this round, there’s been commentary both for and against the distribution looking a bit too evenly balanced.

I think it’s at least interesting that it tries to cater to diversity in the long tail.
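One way to see why the “too balanced” debate exists: once contributions have somehow been reduced to a score per project (the hard part, e.g. via votes, which is simply assumed away here), a single parameter can decide how flat the payout curve is. The sketch below is a hypothetical proportional split with an exponent `alpha`. It is not Optimism’s actual allocation formula, and `allocate`, `alpha` and the project names are made up for illustration.

```python
def allocate(pool: float, scores: dict, alpha: float = 1.0) -> dict:
    """Hypothetical split of `pool` across projects in proportion to score ** alpha.

    alpha == 1 gives plain proportional shares; alpha < 1 flattens the curve
    toward the long tail; alpha > 1 concentrates funds on the top scorers.
    """
    # Projects with non-positive scores get nothing (this also avoids fractional powers of negatives).
    weights = {name: s ** alpha for name, s in scores.items() if s > 0}
    total = sum(weights.values())
    if total == 0:
        return {name: 0.0 for name in scores}
    return {name: pool * weights.get(name, 0.0) / total for name in scores}


scores = {"client": 90, "docs": 9, "tooling": 1}  # hypothetical retroactive scores
print(allocate(100, scores))             # proportional: client 90, docs 9, tooling 1
print(allocate(100, scores, alpha=0.5))  # flatter: tooling's share rises
```

Lowering `alpha` is one crude lever for favouring the long tail; push it too far and you get exactly the “everyone gets roughly the same” outcome that critics of a flat round point to.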

The $3B 270 Park Ave

I must admit, this building, set to be the headquarters of JPMorgan Chase, is peak NYC for me. I’m a big fan of the design. It’s what I miss most about living in NYC: there’s an acute sense that the city is alive, growing, and changing every day.

Train Daddy

Every industry has its characters. If you’re in it, you get to know them; from the outside, you only get glimpses of the movers and shakers. While I love all things city and urbanism, I’d never heard of Andy Byford, an apparent celebrity in the transport industry. It was interesting both to learn more about him and to get a deeper look into the transport scene.

The Crampedness of the “Volume”

The Mandalorian famously introduced the “volume”: a sound stage surrounded by huge LED screens showing CGI backgrounds, which puts the characters into the scene and also gives it more realistic lighting. In some cases, it’s been used to great effect. You can see how it works here:

While I enjoyed the new Ant-Man, it’s not the first time I’ve seen commentary on how the volume can cause unique problems with scene blocking and sense of scale. This video goes to some length on how the volume can make the world feel cramped and small.

The problem is that large crowd shots at short-to-mid range have to be CGI, which, when budgets need balancing, leaves you with choices like these:

Put extreme focus on the characters and blur the CGI background.

Block scenes so that the crowds aren’t really visible, creating awkward static shots (as discussed in the video).

As a result, there are fewer shots in the middle range.

If you look at the Coruscant scenes in The Mandalorian, you can see that sometimes they just choose to blur the background to focus on the characters (not necessarily a bad choice), but it becomes obvious and noticeable after a while. It does create a dreamy atmosphere, sure, but at the cost of the world feeling cramped and small. A lot of The Mandalorian has excessively blurred backgrounds.

This contrasts with Andor, which uses real-life locations and feels more organic as a result.

I hope there’s a balance to be found here.

salute & Sammy Virji - 'Peach'

This week’s song is immaculate. It has such a groove to it, with flowing arps and a great punchy bassline. A really great song to run to. Enjoy!

That’s it for this week!

Enjoy a sunset. :)

Simon
