Evaluating Prediction Markets

Also: Exercise Paradox, Olympics, and Orchestral Beck

Prediction markets are having a bit of a moment. Polymarket alone racked up $213 million of volume in July.

Last year, I wrote about how it’s increasingly treated as more of a social venue:

Social innovation around prediction markets can definitely introduce more people to it. It’s not a replacement for your other news sources, but it’s definitely unique in the type of news you get from it. It’s sometimes much quicker to drop into a prediction market to get the zeitgeist vs trying to shuffle between Twitter/X’s poor search, going to specific social media accounts, or hopping around mainstream media outlets.

It’s great to see it (finally) become more popular. I don’t bet, but I still find it great as a gravity well for information. When money is on the line, people tend to bring information that supports their predictions. It’s a curator.

However, one thing I’ve found is that those who espouse it treat it as 1) infallible, and 2) in competition with mainstream media, social media, or other avenues of information production (like polling). For the latter, you want media and information that’s produced for different reasons and incentives.

For the former, it’s prediction market survivor bias. Events that weren’t popular, or that didn’t resolve in a newsworthy way, are simply forgotten. The bias also takes the following form.

If an outcome sits at 70% for a while and eventually comes true, it’s seen as “look how right it was.”

If an outcome sits at 15% for a while and suddenly flips to true, it’s seen as “look! The mainstream media didn’t even see this likelihood of happening!”

It’s never wrong.

This is taken as proof that Biden was going to drop out, hovering at 25% for quite a while. A success!

In the inverse, this chart could be seen as accurately predicting that there was a small chance BJP wasn’t going to get a majority in the Indian elections. A success!

Another great example, which Manifold themselves tout as a success, ignores the alternative.

The alternatives are markets that hovered around 40% and then went to zero (didn’t happen).

So, depending on your lens, it’s always right, which is obviously not a useful way to evaluate their use beyond being betting platforms and as a news source.

It raises the question: if you want to evaluate a prediction market, how do you do it? I was curious and did some research.

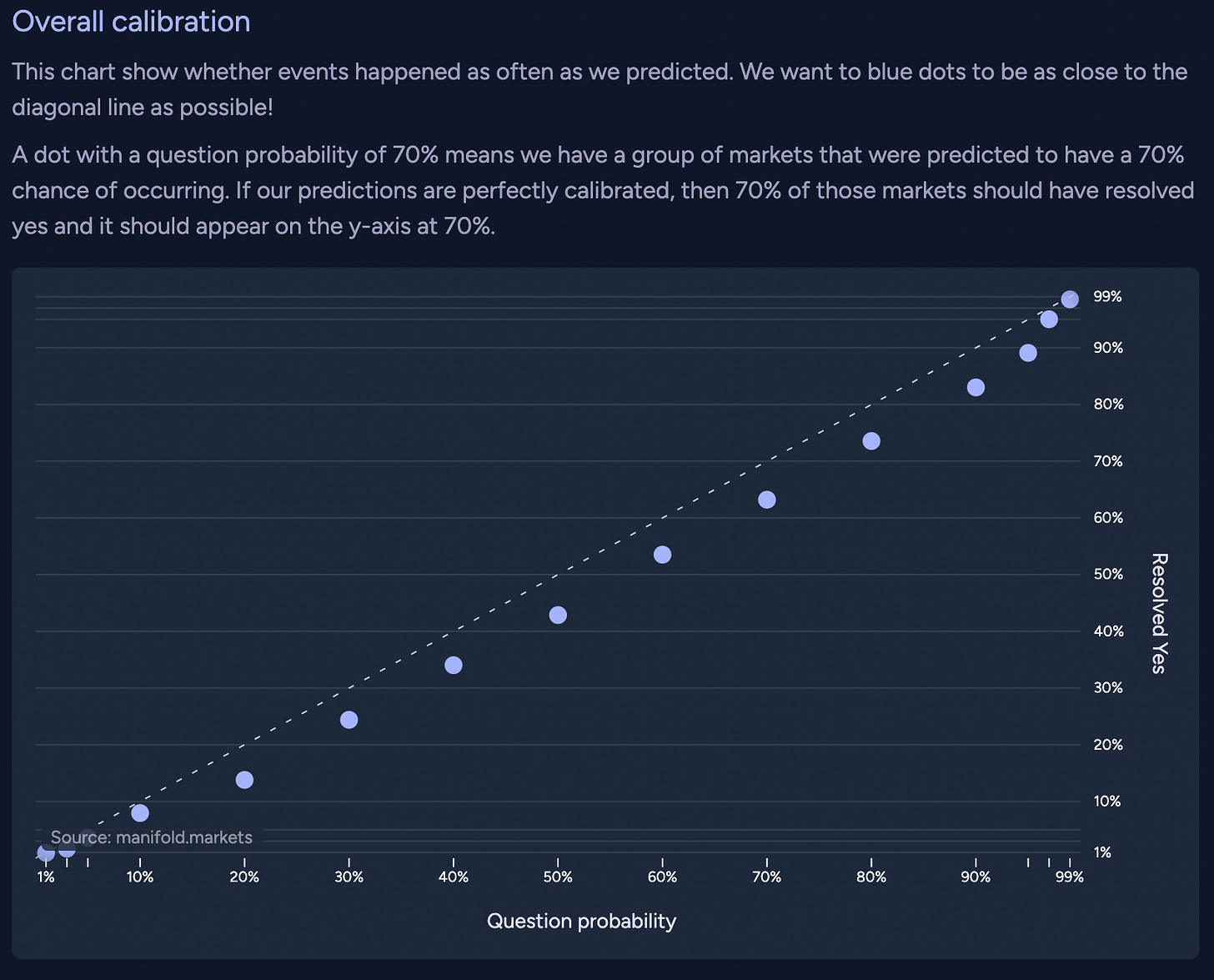

An oft-used metric is calibration: across the entire prediction market, do trades at a given percentage resolve true that percentage of the time? Put differently, outcomes that are 40% likely should happen 40% of the time. If they do, the market is regarded as well calibrated.

The simplest approach takes every trade, checks whether the outcome did or did not happen, and assigns it a score. An oft-used scoring rule is the Brier score, which is (probability - outcome)^2. Squaring means that a trade close to the outcome (eg, 90% → 100%) is penalised far less than one far from the outcome (eg, 10% → 100%).

A 21% trade (0.21) that happened (1), is scored as: (0.21-1)^2 = 0.6241.

0 is perfect (eg, a 100% prediction that happened). 1 is the worst (eg, a 100% prediction that didn’t happen).

When you add all these scores together and divide by the number of scores, you get the combined Brier score: the closer to zero, the better.
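To make the arithmetic concrete, here's a minimal Python sketch of the scoring described above. The function names and the list of trades are made up for illustration; they aren't taken from any platform's code.

```python
def brier(probability: float, outcome: int) -> float:
    """Squared error between a forecast probability and a 0/1 outcome."""
    return (probability - outcome) ** 2


def mean_brier(forecasts: list[tuple[float, int]]) -> float:
    """Average Brier score over (probability, outcome) pairs."""
    if not forecasts:
        raise ValueError("need at least one forecast")
    return sum(brier(p, o) for p, o in forecasts) / len(forecasts)


# Hypothetical trades: (probability at trade time, 1 if it happened else 0)
trades = [(0.21, 1), (0.90, 1), (0.10, 1), (0.40, 0)]

for p, o in trades:
    print(f"{p:.2f} -> {o}: {brier(p, o):.4f}")
print(f"combined: {mean_brier(trades):.4f}")
```

The first trade reproduces the 0.6241 from the worked example, and the 10% trade that came true is punished far more heavily than the 90% one.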

When you combine all probabilities against the actual outcomes, you get a calibration plot. The closer the dots to the line, the better. This one is Manifold’s own (a play-money prediction market platform).
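Here's a rough sketch of how the numbers behind a plot like that could be computed, assuming you have a list of (probability, outcome) pairs. The bin width, the function name, and the sample data are my own illustrative choices, not Manifold's actual method.

```python
from collections import defaultdict


def calibration_table(forecasts, bin_width=0.1):
    """Group forecasts into probability bins and compare each bin's
    average forecast with how often its events actually happened."""
    n_bins = round(1 / bin_width)
    bins = defaultdict(list)
    for p, outcome in forecasts:
        index = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the top bin
        bins[index].append((p, outcome))

    rows = []
    for index in sorted(bins):
        members = bins[index]
        mean_forecast = sum(p for p, _ in members) / len(members)
        hit_rate = sum(o for _, o in members) / len(members)
        rows.append((mean_forecast, hit_rate, len(members)))
    return rows


# Hypothetical data: (probability, 1 if it happened else 0)
forecasts = [
    (0.42, 1), (0.45, 0), (0.41, 0), (0.44, 1), (0.48, 0),
    (0.85, 1), (0.88, 1), (0.82, 0), (0.86, 1),
]

for mean_forecast, hit_rate, count in calibration_table(forecasts):
    print(f"forecast ~{mean_forecast:.2f}  happened {hit_rate:.2f}  (n={count})")
```

Each row is one dot on the plot: the average forecast is the x-value and the hit rate is the y-value. A well calibrated market has the two roughly equal in every bin.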

This simple scoring rule, however, does not account for *when* the trade occurred. Thus, each probability can be weighted in different ways.

Manifold analyses itself by weighting each trade by its initial and final probability to account for the average probability.

eg, If you start a bet at 10% and “ride” it to “90%” before exiting the trade, then the average probability is: (10+90)/2 = 50%. If a person is willing to stay in a trade as the market changes, that is an additional signal that matches their convictions.

But this does not account for how long the gap is between prediction and outcome. eg, if you want to forecast rain, a 90% prediction made 20 minutes before the rain arrives, because you could see some dark clouds, is markedly different to a 90% prediction of rain made 24 hours ahead.
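One way to make that gap visible is to score forecasts separately by how far ahead of the outcome they were made. This is only a sketch under my own assumptions: the bucket edges, the function name, and the sample forecasts are hypothetical, and no platform mentioned here is known to score this way.

```python
from bisect import bisect_left
from collections import defaultdict


def brier_by_horizon(forecasts, edges_hours=(1, 24, 168)):
    """Average Brier score per time-to-resolution bucket.

    forecasts: iterable of (probability, outcome, hours_before_resolution).
    """
    labels = [f"<= {edge}h" for edge in edges_hours] + [f"> {edges_hours[-1]}h"]
    buckets = defaultdict(list)
    for probability, outcome, hours_ahead in forecasts:
        label = labels[bisect_left(edges_hours, hours_ahead)]
        buckets[label].append((probability - outcome) ** 2)
    return {label: sum(scores) / len(scores) for label, scores in buckets.items()}


# Hypothetical rain forecasts: (probability, did it rain, hours ahead)
forecasts = [
    (0.9, 1, 0.33),  # dark clouds already visible, 20 minutes out
    (0.9, 1, 24),    # same confidence, a full day out
    (0.6, 1, 24),
    (0.3, 0, 100),
]

for label, score in brier_by_horizon(forecasts).items():
    print(f"{label}: {score:.3f}")
```

The two 90% rain calls earn the same individual score, but they now land in different buckets, so the 20-minute call is only compared against other last-minute calls.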

Metaculus goes further by including additional weighting:

Metaculus aggregates the predictions together using a model that calibrates and weights them based on factors like recency and the predictors' track records, to produce an aggregate prediction (called the Metaculus Prediction) that's ideally better than the best individual. Metaculus also provides a simpler and "dumber" aggregate called the Community Prediction, which is simply the median of recent predictions - I also included this for comparison.

While calibration and Brier scores are useful, they also aren’t the entire picture.

In some instances, a well calibrated market doesn’t imply a good Brier score. Eigil Rischel explains with an example:

Philip Tetlock also describes how a set of predictions can be well calibrated, but not really be *useful* (screencap at 21:41).

If all your predictions hover around 50% (give or take a few percentage points), you are essentially saying that you perform only slightly better than a coin toss. As Philip Tetlock describes it: never going above or below minor shades of maybe.

Whereas omniscience would look like this:

In other words, you’re able to say that something will happen definitively at any point in time and it will happen (and vice versa).
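A small sketch of the contrast, using made-up outcomes. The "hedger" always says 50% on a set of events where half happen, so it is perfectly calibrated, while the "omniscient" forecaster always says 0% or 100% and is right. The names and data are mine, purely for illustration.

```python
def mean_brier(probabilities, outcomes):
    """Average of (probability - outcome)^2 across all forecasts."""
    return sum((p - o) ** 2 for p, o in zip(probabilities, outcomes)) / len(outcomes)


# Hypothetical events: half of them happened
outcomes = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]

hedger = [0.5] * len(outcomes)            # "minor shades of maybe"
omniscient = [float(o) for o in outcomes]  # always certain, always right

print(mean_brier(hedger, outcomes))      # 0.25
print(mean_brier(omniscient, outcomes))  # 0.0
```

Both forecasters are calibrated (the hedger's 50% calls come true 50% of the time), yet the hedger's score of 0.25 is no better than guessing. Calibration alone can't tell them apart; the Brier score can.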

Because you ultimately don’t have control groups, you have to combine predictions together in order to determine if a market was actually good at what it’s aiming to do. In some cases, prediction markets have been compared to polls when they’re used in elections. Metaculus beat 538, but both beat all the other prediction markets. Another analyst found similar results.

Bundling them by platform has some merit, because a play-money platform will have different distortions to a real money platform. eg, play-money won’t have to deal with the time cost of money, but there’s less actual skin-in-the-game.

With enough trades, each trader might also accrue a score. And so you could compare traders against other traders (as Metaculus to some extent includes in their scoring).

It could also be that analytical outfits could group markets for comparison in unique ways: across platforms, across traders, across trades, across liquidity, across topical domains, etc.

In conclusion, prediction markets are growing up and attracting serious volume. They definitely still need innovation in the social realm and as a news source. But I also think they need a lot more innovation in scoring and analysis, such that when someone says: “Look, it helped predict the future!” we can actually score it properly rather than rely on supporters espousing it because they are vested in its success. I don’t know what that actually looks like, but I’m looking forward to seeing more people try. If you have more sources and readings, please share!

Bonus Content!

📚 Reading - At the Existentialist Café by Sarah Bakewell

Taking a break from fiction and reading this after a recommendation from my wife. Extremely fascinating history of the existentialists in the 20th century. As the winds of change are currently blowing, one particular part has stood out so far: Sartre’s desire, just before WW2 broke out, to find a philosophy of wisdom, not a philosophy of contemplation. Confusing times lead to an ideological desire for how to live, not abstract questions on why.

🕹 Playing - Balatro

Yes, I’ve been deep into roguelikes as a genre for a while and still playing this when I can. I also like that it feels like there’s a lot of depth here over time. Definitely feel this is going to be great for upcoming travels.

📺 Watching - The Curse

Still going through Nathan Fielder’s work. The Curse is quite different. Some of it is quite slow, but then sometimes that slowness, letting it breathe, is really great. Choosing to watch The Curse in a more meditative way.

👨🏻‍💻 Work - New Novel

About 20-30% through draft 2 of my new novel. It’s all been a really fun challenge as the book is partly about the lives we keep in private and secret. So, I have to juggle a lot of minds interacting in complex ways with each other. I also bought my first iPad this week, primarily because I’m actually spending most of my time writing these days as opposed to coding or more serious workhorse work (like audio and video production). It’s also just nicer to travel with. Been enjoying using it as a writing tool!

🏃🏻‍♂️ Running - Too Damn Hot

I’ve been training for a fall half-marathon. Another go at last year’s half marathon. Aiming to get under 2hr comfortably, but the heat has been unbearable. It’s really curtailed the amount of running I’ve been wanting to do. Can’t even get tempo/speed work in and I detest running on treadmills. Hopefully it cools down soon!

🌐 Links

Olympics

It’s the Olympics! It’s been interesting to see what Paris has opted to do differently. Of the modern-era Games, it has built the fewest new structures. Quite smart.

Exercise & Fitness

It’s been interesting to follow the recent research on how one’s body adapts to exercise. It tends to return to a baseline of how many calories it burns. Thus, it means that your body is both 1) getting better at using the energy it has to burn, and 2) rerouting energy away from well-intentioned systems that are actually harmful (like your immune system having too much energy and thus leaving you chronically inflamed). Definitely makes one rethink one’s relationship to exercise and health.

Descending From Ancestors

This was super interesting. I was not aware how chromosomes are spliced from our ancestors.

🎶 Music

Beck - Paper Tiger (Live)

Beck is my favorite musician. He’s been touring recently, playing with orchestras across the US, and I was fortunate enough to catch him playing with the National Symphony Orchestra. His range is incredible and the slower orchestral songs are incredible to hear live. So, here’s a rendition of Paper Tiger. Enjoy!

That’s it for this week folks! Testing a new layout too. I find that people have enjoyed me sharing just what I’m generally up to each week. So leaning more into that. :)

Hope you get to see a lovely sunset. See you next week!

Simon
